Machine learning (ML) has become a transformative force across industries, from finance to healthcare. However, the path from raw data to a deployed model is fraught with challenges. Machine Learning Operations (MLOps) addresses them: it is a set of practices that streamlines the development and deployment of ML models, bridging the gap between data scientists and IT operations.

By automating and standardizing the ML lifecycle, MLOps enables organizations to deploy models faster, improve model performance, and enhance collaboration among teams. This guide explores the MLOps lifecycle, the culture that supports it, common challenges, emerging trends, and the relationship between MLOps and DevOps. Understanding MLOps is crucial for organizations looking to harness the full potential of machine learning.

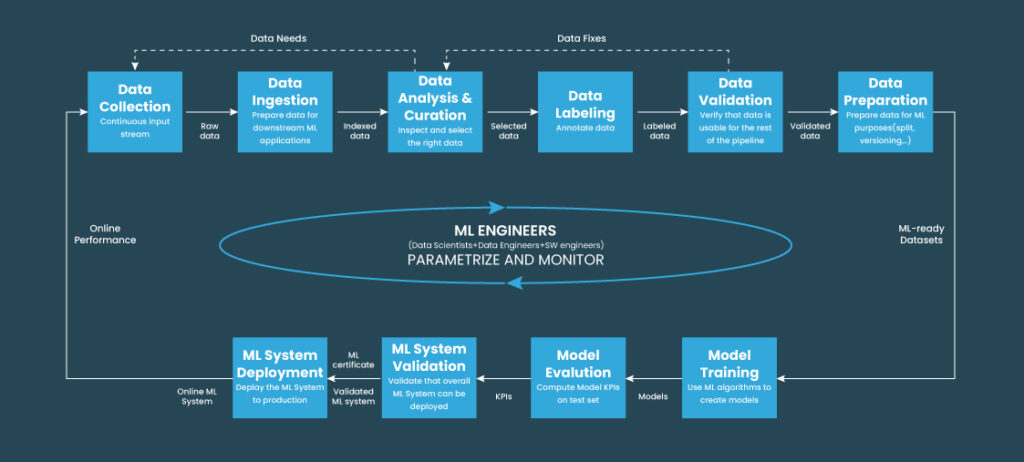

The MLOps Lifecycle

The MLOps lifecycle encompasses the entire journey of a machine learning model, from its inception to production and beyond. It involves a series of interconnected stages that require collaboration between data scientists, engineers, and IT operations.

Data Engineering

The foundation of any ML project is high-quality data. Data engineering involves collecting, cleaning, and preparing data for model training. This stage includes:

- Data Ingestion: Gathering data from various sources such as databases, APIs, and files.

- Data Cleaning: Handling missing values, outliers, and inconsistencies to ensure data quality.

- Data Transformation: Converting raw data into a suitable format for model training.

- Feature Engineering: Creating new features from existing data to improve model performance.
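
As a rough illustration of the cleaning and feature engineering steps above, here is a minimal sketch using only the Python standard library. The field names (`age`, `income`), the median imputation strategy, and the derived ratio feature are all assumptions chosen for the example; a real pipeline would pick these based on the data and the problem.

```python
from statistics import median


def clean_and_engineer(rows):
    """Impute missing ages with the median, then add one derived feature."""
    # Data cleaning: compute a fill value from the rows that do have an age.
    known_ages = [r["age"] for r in rows if r["age"] is not None]
    fill_age = median(known_ages)

    prepared = []
    for r in rows:
        age = r["age"] if r["age"] is not None else fill_age
        prepared.append({
            "age": age,
            "income": r["income"],
            # Feature engineering: a ratio built from two raw columns.
            "income_per_year_of_age": r["income"] / age,
        })
    return prepared


# Hypothetical raw records, as they might arrive from an ingestion step.
raw = [
    {"age": 30, "income": 60000},
    {"age": None, "income": 45000},
    {"age": 50, "income": 100000},
]
prepared = clean_and_engineer(raw)
```

Keeping the imputation and feature logic in one function makes it easier to apply exactly the same preparation at training time and at prediction time.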

Model Development and Training

Data scientists build and train machine learning models using various algorithms and techniques. This stage involves:

- Model Selection: Choosing the appropriate algorithm based on the problem and data.

- Model Training: Feeding the prepared data into the model to learn patterns and relationships.

- Hyperparameter Tuning: Optimizing model performance by adjusting hyperparameters.

- Model Evaluation: Assessing model performance using metrics like accuracy, precision, recall, and F1-score.
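
The evaluation metrics named above can be computed directly from the counts of true and false positives and negatives. The sketch below assumes a binary classifier with labels 0 and 1; the function name and the sample labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0/1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground truth and predictions from a validation set.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In practice most teams would rely on a library implementation of these metrics; writing them out once makes it clear what each number measures.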

Model Deployment

Once a model is trained and validated, it’s deployed to a production environment. This stage involves:

- Model Serving: Integrating the model into a production system to make predictions.

- Infrastructure Provisioning: Setting up the necessary hardware or cloud resources.

- Containerization: Packaging the model and its dependencies into containers for easy deployment.

- Continuous Integration and Continuous Delivery (CI/CD): Automating the deployment process to ensure consistency and efficiency.
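
One way a CI/CD pipeline can automate deployment decisions is a promotion gate that compares a candidate model against the one currently in production. The following is a sketch under stated assumptions: the function name, the metric keys, and the thresholds (`min_recall`, `max_regression`) are hypothetical and would need to be tuned per project.

```python
def should_promote(candidate, production, min_recall=0.80, max_regression=0.01):
    """Decide whether a candidate model may replace the production model.

    Returns a (decision, reason) tuple so the pipeline can log why it stopped.
    """
    # Absolute floor: the candidate must meet a minimum recall.
    if candidate["recall"] < min_recall:
        return False, "recall below minimum"
    # Relative check: F1 may not drop more than max_regression below production.
    if candidate["f1"] < production["f1"] - max_regression:
        return False, "f1 regressed against production"
    return True, "ok"


# Hypothetical metric reports produced by an earlier evaluation step.
production_metrics = {"recall": 0.82, "f1": 0.80}
candidate_metrics = {"recall": 0.85, "f1": 0.82}
decision, reason = should_promote(candidate_metrics, production_metrics)
```

A pipeline step could call this function and fail the build when `decision` is `False`, which keeps a weaker model from reaching production without manual review.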

Model Monitoring and Retraining

Deployed models require continuous monitoring to assess their performance and identify issues. This stage involves:

- Performance Metrics: Tracking key performance indicators (KPIs) to evaluate model accuracy and drift.

- Anomaly Detection: Identifying unexpected changes in model behavior.

- Retraining: Updating the model with new data to maintain performance.

- Model Versioning: Managing different versions of models for experimentation and rollback.
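
To make drift tracking concrete, the sketch below computes the Population Stability Index (PSI), one of several statistics used to compare a feature's distribution at training time with what the model sees in production. The bin count, the small floor that avoids `log(0)`, and the sample data are assumptions for illustration. A commonly quoted rule of thumb treats values above roughly 0.2 as worth investigating, but any alerting threshold should be validated on your own data.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go into the first or last bin.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. shifted production data.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

no_drift_score = psi(baseline, baseline)
drift_score = psi(baseline, shifted)
```

A monitoring job could compute this score on a schedule and trigger retraining, or at least an alert, when it crosses the chosen threshold.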

Building a Strong MLOps Culture

A successful MLOps implementation requires more than just technology; it demands a cultural shift within the organization. Fostering a collaborative environment where data scientists, engineers, and business stakeholders work together is essential.

Breaking Down Silos

Traditional organizational structures often create silos between data scientists, engineers, and business units. MLOps thrives on collaboration. By breaking down these silos, organizations can facilitate knowledge sharing, improve communication, and accelerate the ML lifecycle.

Emphasizing Continuous Learning and Experimentation

MLOps is an evolving field. Encouraging a culture of experimentation and learning is crucial for staying ahead. Employees should be encouraged to explore new tools, techniques, and approaches to improve ML processes.

Prioritizing Data Quality

High-quality data is the foundation of successful ML models. Organizations should invest in data governance and quality initiatives to ensure data accuracy, consistency, and reliability.

Building a Data-Driven Culture

A data-driven culture is essential for MLOps success. Employees at all levels should be empowered to use data to make informed decisions. This involves providing data literacy training and access to data visualization tools.

Measuring MLOps Success

Defining and tracking key performance indicators (KPIs) is crucial for evaluating the effectiveness of MLOps initiatives. Metrics such as model deployment frequency, mean time to deployment, and model accuracy can be used to assess progress.
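
As a small example of how two of these KPIs might be calculated, the sketch below derives deployment frequency and mean time to deployment from a list of deployment records. The record layout (`approved` and `deployed` timestamps) and the 28-day reporting window are assumptions; real records would come from the CI/CD system or a model registry.

```python
from datetime import datetime
from statistics import mean

# Hypothetical deployment log for a four-week reporting window.
deployments = [
    {"model": "churn", "approved": datetime(2024, 1, 2, 9, 0),
     "deployed": datetime(2024, 1, 2, 15, 0)},
    {"model": "churn", "approved": datetime(2024, 1, 10, 9, 0),
     "deployed": datetime(2024, 1, 11, 9, 0)},
    {"model": "fraud", "approved": datetime(2024, 1, 20, 9, 0),
     "deployed": datetime(2024, 1, 20, 21, 0)},
]


def deployment_kpis(records, window_days):
    """Summarize deployment frequency and mean time to deployment."""
    # Hours between a model being approved and it going live.
    hours = [(r["deployed"] - r["approved"]).total_seconds() / 3600
             for r in records]
    return {
        "deployments_per_week": len(records) / (window_days / 7),
        "mean_time_to_deployment_hours": mean(hours),
    }


kpis = deployment_kpis(deployments, window_days=28)
```

Tracking these numbers over successive windows shows whether automation work is actually shortening the path to production.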

Fostering a DevOps Mindset

Adopting DevOps principles can enhance MLOps practices. By focusing on automation, collaboration, and continuous improvement, organizations can streamline the ML lifecycle and deliver value faster.

By cultivating a strong MLOps culture, organizations can create an environment where machine learning can thrive and deliver tangible business benefits.

Overcoming Common MLOps Challenges

Implementing MLOps is not without its challenges. Addressing these hurdles is crucial for successful ML initiatives.

Data Challenges

- Data Quality: Ensuring data accuracy, completeness, and consistency remains a significant challenge. Investing in robust data cleaning and preprocessing pipelines is essential.

- Data Drift: Model performance can degrade over time due to changes in data distribution. Implementing monitoring and retraining mechanisms is crucial.

- Data Privacy and Security: Protecting sensitive data while enabling model training requires careful consideration of privacy regulations and security measures.

Model Management Challenges

- Model Complexity: Managing complex models with numerous hyperparameters can be daunting. AutoML tools and techniques can help streamline this process.

- Model Explainability: Understanding how models arrive at decisions is essential for trust and compliance. Explainable AI techniques are gaining importance.

- Model Bias: Addressing bias in data and models is crucial for fair and ethical use of ML. Regular monitoring and mitigation strategies are necessary.
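
Regular bias monitoring needs a measurable signal. One simple option, shown here as a sketch, is the demographic parity gap: the difference in positive-prediction rates between groups. The group labels and predictions are invented, and this is only one of many fairness metrics; which one is appropriate depends on the application.

```python
def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs and the group each prediction belongs to.
predictions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
```

A gap near zero means the groups receive positive predictions at similar rates; a large gap is a prompt for closer investigation rather than proof of unfairness on its own.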

Infrastructure and Deployment Challenges

- Scalability: Handling varying workloads and model updates requires flexible infrastructure. Cloud-based solutions often provide the necessary scalability.

- Cost Optimization: Managing the computational costs associated with ML workloads is essential. Optimizing resource utilization and leveraging cost-effective cloud services are both crucial.

- Deployment Automation: Automating the deployment process helps reduce errors and accelerates time-to-market.

Organizational Challenges

- Talent Gap: Finding skilled MLOps professionals can be challenging. Organizations need to invest in training and development.

- Cultural Change: Fostering a data-driven and collaborative culture is essential for MLOps success.

- Measuring MLOps Success: Defining and tracking relevant metrics is crucial for demonstrating the value of MLOps initiatives.

The Future of MLOps: Trends and Predictions

The MLOps landscape is evolving rapidly, driven by technological advancements and changing business needs. Several trends are shaping the future of MLOps:

AutoML and Low-Code/No-Code MLOps

AutoML platforms are making machine learning more accessible to a broader audience. These platforms automate many aspects of the ML lifecycle, including data preprocessing, feature engineering, model selection, and hyperparameter tuning. Low-code/no-code MLOps tools will further democratize machine learning by allowing business users to build and deploy models without extensive coding knowledge.

Edge Computing and MLOps

Edge computing is gaining traction, bringing computation and data storage closer to the data source. This trend will require new MLOps strategies for deploying and managing models at the edge.

MLOps for Real-Time Applications

Real-time applications, such as fraud detection and recommendation systems, demand low-latency inference. MLOps will focus on optimizing model deployment and inference for real-time environments.
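
For low-latency inference, tail latency usually matters more than the average. The sketch below times repeated calls to a prediction function and reports a nearest-rank percentile. The `dummy_predict` function is a stand-in for a real model call, and the choice of the 95th percentile is an assumption for the example.

```python
import math
import time


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def measure_latency_ms(predict, inputs):
    """Time each call to predict and return the latencies in milliseconds."""
    samples = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def dummy_predict(x):
    # Placeholder for a real model; just does a little arithmetic.
    return sum(i * x for i in range(100))


latencies = measure_latency_ms(dummy_predict, range(200))
p95_ms = percentile(latencies, 95)
```

Comparing the measured percentile against a latency budget before and after each model update helps catch regressions that an average would hide.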

Responsible AI and MLOps

As AI becomes more pervasive, ethical considerations will become increasingly important. MLOps will incorporate practices for ensuring fairness, transparency, and accountability in ML models.

Integration of MLOps with DevOps and DataOps

The convergence of MLOps, DevOps, and DataOps will create a cohesive and efficient data-driven pipeline. This integration will foster collaboration and streamline the entire data-to-value process.

MLOps and the Metaverse

The emerging metaverse may present new opportunities and challenges for MLOps. Managing ML models in decentralized virtual environments would likely require new approaches.

By staying informed about these trends, organizations can prepare for the future of MLOps and gain a competitive advantage.

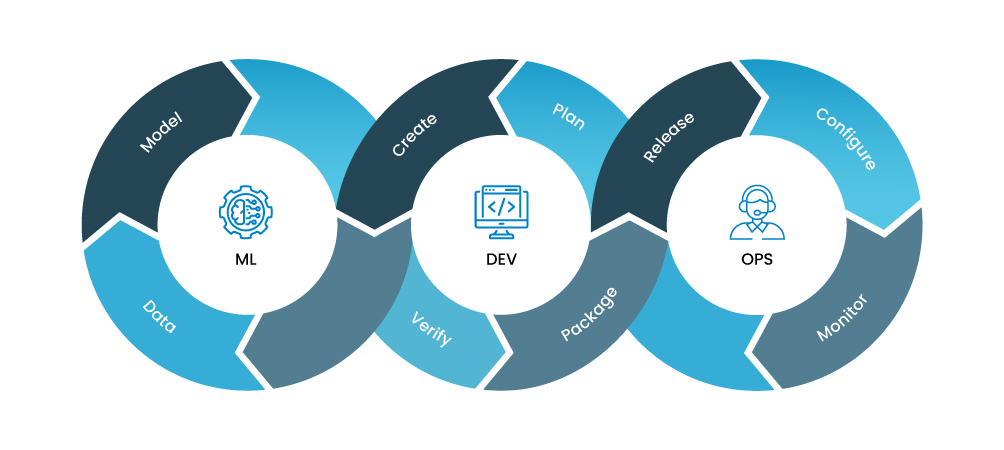

MLOps and DevOps: A Synergistic Relationship

MLOps and DevOps share a common goal: to streamline and automate processes for faster, more reliable delivery. While DevOps focuses on software development and IT operations, MLOps specifically addresses the unique challenges of machine learning models.

By combining the principles of DevOps with MLOps, organizations can achieve a seamless workflow from data ingestion to model deployment and monitoring. This synergy accelerates time-to-market, improves model performance, and ensures operational efficiency.

Key areas of overlap between MLOps and DevOps include:

- Continuous Integration and Continuous Delivery (CI/CD): Automating the build, test, and deployment of both software and ML models.

- Infrastructure as Code (IaC): Managing infrastructure using code for both IT and ML environments.

- Version Control: Tracking changes to code, data, and models for reproducibility and collaboration.

- Monitoring and Logging: Implementing robust monitoring systems to track the performance of both software and ML models.
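
Versioning data and models alongside code can start with something as simple as a content fingerprint. The sketch below hashes the training data bytes together with the training parameters, so any change to either produces a new version identifier. The function name, the 12-character truncation, and the sample inputs are assumptions; dedicated data and model versioning tools offer far more than this.

```python
import hashlib
import json


def fingerprint(data_bytes, params):
    """Short, deterministic identifier for a dataset plus training parameters."""
    digest = hashlib.sha256()
    digest.update(data_bytes)
    # sort_keys makes the hash independent of dictionary key order.
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()[:12]


# Hypothetical training inputs.
training_data = b"age,income\n30,60000\n50,100000\n"
version_a = fingerprint(training_data, {"learning_rate": 0.1, "max_depth": 4})
version_b = fingerprint(training_data, {"max_depth": 4, "learning_rate": 0.1})
version_c = fingerprint(training_data, {"learning_rate": 0.2, "max_depth": 4})
```

Here `version_a` and `version_b` are identical because only the key order differs, while `version_c` changes because a parameter value changed. Storing the fingerprint with each trained model makes it possible to trace a prediction back to the exact data and settings that produced it.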

By adopting a unified approach to MLOps and DevOps, organizations can break down silos, improve collaboration, and deliver better business outcomes.

Conclusion

MLOps is no longer a buzzword; it’s a business imperative. By streamlining the machine learning lifecycle, MLOps empowers organizations to deploy models faster, improve model performance, and drive better business outcomes. As the field of machine learning continues to evolve, MLOps will play an increasingly critical role in unlocking the full potential of AI.

To succeed in the era of AI, organizations must prioritize MLOps and invest in the necessary tools, technologies, and talent. By adopting a holistic approach that encompasses data engineering, model development, deployment, monitoring, and retraining, businesses can build a robust and scalable ML infrastructure.

At Codersperhour, we specialize in helping organizations implement and optimize their MLOps practices. Our expertise in data engineering, machine learning, and DevOps enables us to deliver tailored solutions that drive business value. Contact us today to learn how we can help you harness the power of MLOps.