In Artificial Intelligence (AI), and Natural Language Processing (NLP) in particular, a technique has emerged that promises to bridge the gap between factual accuracy and fluent text generation. This technique is called Retrieval-Augmented Generation, or RAG. Unlike a standalone Large Language Model (LLM), which relies solely on what it learned from its training data, RAG combines information retrieval with text generation, producing outputs that are better grounded and more reliable.

Understanding the Limitations of LLMs

Large Language Models have become a cornerstone of various AI applications, from text summarization to code generation. However, LLMs have two significant limitations: bias and factual inaccuracy. Because LLMs are trained on massive datasets of text and code, they can reproduce the biases and factual errors present in that data. Additionally, an LLM on its own cannot look up external information while generating a response, which makes it hard to ground its outputs in real-world knowledge.

How Retrieval-Augmented Generation Works

Retrieval-Augmented Generation (RAG) might sound complex, but it boils down to a powerful collaboration between two key components. Let’s peek under the hood and see how RAG works its magic:

- Information Retrieval: In the first stage, RAG utilizes a retrieval component to query external knowledge sources, such as databases or knowledge graphs, based on the user’s input. This retrieval component leverages information retrieval techniques to find the most relevant and reliable information related to the user’s prompt.

Imagine a vast library – this represents the information retrieval component of RAG. When a user submits a query, RAG doesn’t just rely on its own internal knowledge (like a traditional LLM). Instead, it acts like a skilled librarian, diligently searching this external library for relevant and reliable information. This information retrieval can involve sophisticated techniques like querying databases, knowledge graphs, or even specialized search engines. The goal is to identify the most pertinent data that directly addresses the user’s specific prompt.

- Augmented Generation: In the second stage, the retrieved information is fed into the LLM alongside the user’s original input. The LLM then utilizes this combined information to generate its response. This allows the LLM to leverage its inherent text-generation capabilities while grounding its output in the retrieved factual data.

Think of the Large Language Model (LLM) in this stage as a master storyteller. The retrieved information, along with the user’s original query, is presented to the LLM. This combined knowledge becomes the LLM’s “ingredients” for crafting its response. The LLM draws on its language capabilities to weave these ingredients into a response that is not only relevant to the user’s prompt but also grounded in the factual data retrieved in Stage 1.
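The two stages described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the document list, the word-overlap scoring, and the `generate` placeholder are all assumptions made for the example. A real system would typically query a database or search index in Stage 1 and call an actual LLM in Stage 2.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "library". A real system would query a database,
# knowledge graph, or search engine instead.
DOCUMENTS = [
    "The warranty for the X100 blender covers parts and labor for two years.",
    "The X100 blender has a 1.5 liter jar and three speed settings.",
    "Our store opens at 9 am and closes at 6 pm on weekdays.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Stage 1: rank documents by word overlap with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Stage 2 input: combine the retrieved passages with the user's query."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


def generate(prompt):
    """Placeholder for an LLM call (assumption: swap in a real model client)."""
    return f"[LLM response to a prompt of {len(prompt)} characters]"


query = "How long is the warranty on the X100 blender?"
passages = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, passages)
answer = generate(prompt)
print(prompt)
```

With these sample documents, the warranty passage ranks first and the store-hours passage is left out of the prompt, so the LLM only sees material related to the question.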

Benefits of Retrieval-Augmented Generation

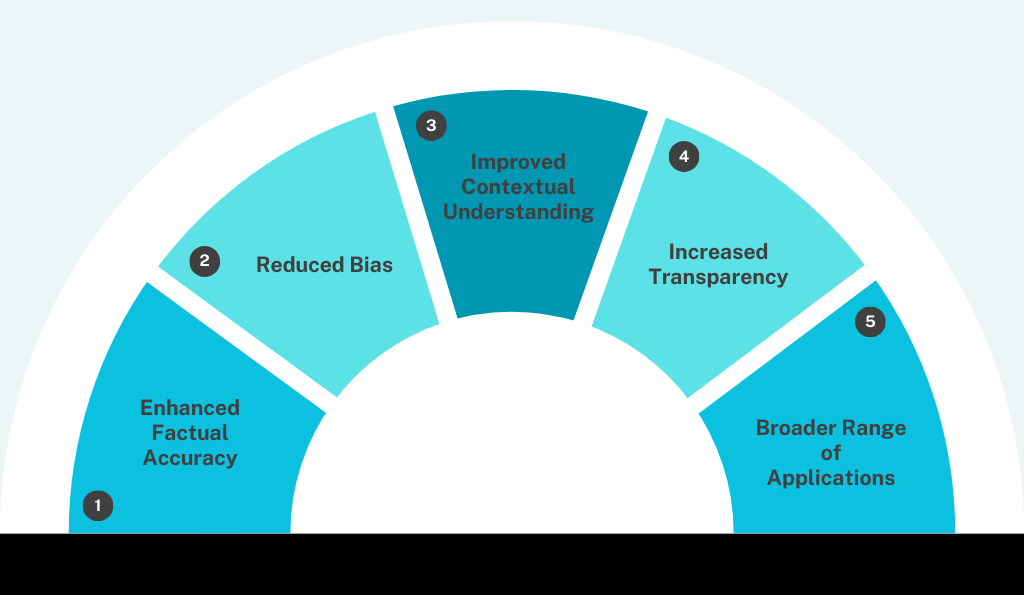

The integration of information retrieval with text generation offers several advantages:

- Enhanced Factual Accuracy: Traditional LLMs are trained on massive amounts of text data, but this data can contain biases and inaccuracies. RAG addresses this by retrieving relevant information from external knowledge sources, such as databases or knowledge graphs, and feeding it into the LLM alongside the user’s query. Because the output is grounded in retrieved data rather than in the model’s memory alone, results tend to be more accurate and trustworthy, provided the sources themselves are reliable.

- Reduced Bias: LLMs trained on biased data can perpetuate those biases in their outputs. RAG can help mitigate this when the external knowledge sources it draws on are curated and vetted for objectivity. Grounding responses in such sources makes them fairer and less likely to reflect prejudices present in the LLM’s training data, although retrieval cannot remove bias that exists in the sources themselves.

- Improved Contextual Understanding: LLMs often struggle to grasp the full context of a user’s query. Here’s where RAG shines. By leveraging retrieved information during the generation process, RAG can understand the specific context surrounding the user’s prompt. This allows RAG to generate responses that are more relevant, informative, and tailored to the user’s needs. Imagine a chatbot trained on generic customer service interactions. With RAG, the chatbot can access product manuals or knowledge bases specific to the customer’s inquiry, leading to more helpful and accurate responses.

- Increased Transparency: One of the challenges with traditional LLMs is the lack of explainability – it’s often difficult to understand the reasoning behind their outputs. RAG offers a degree of transparency by virtue of its two-stage process. By identifying the retrieved information used to inform the final output, RAG allows users to understand the data that shaped the response. This transparency fosters trust and allows for further refinement of the AI model.

- Broader Range of Applications: The improved accuracy, reduced bias, and contextual understanding offered by RAG unlock a wider range of applications for AI technology. RAG can be used to create more informative chatbots, develop supercharged search engines with contextually relevant results, generate high-quality content tailored to specific needs, and power advanced question-answering systems that provide comprehensive and accurate information.
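The transparency benefit listed above can be made concrete by returning the retrieved passages together with the generated text. The sketch below is one possible shape for that, not a standard API: the `Passage` and `RagAnswer` classes, the source identifiers, and the `fake_generate` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source_id: str  # e.g. a document name or section (hypothetical values below)
    text: str


@dataclass
class RagAnswer:
    text: str
    sources: list  # the passages that were placed in the prompt


def answer_with_sources(query, passages, generate):
    """Build a numbered context, call the generator, and keep the sources."""
    numbered = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    prompt = (
        f"Context:\n{numbered}\n\n"
        f"Question: {query}\n"
        "Cite the passages you used by number."
    )
    return RagAnswer(text=generate(prompt), sources=list(passages))


def fake_generate(prompt):
    """Stand-in for an LLM call so the example runs without a model."""
    return "The warranty lasts two years [1]."


passages = [
    Passage("manual.pdf#warranty", "The warranty covers parts and labor for two years."),
    Passage("manual.pdf#specs", "The jar holds 1.5 liters."),
]
result = answer_with_sources("How long is the warranty?", passages, fake_generate)

print(result.text)
for i, p in enumerate(result.sources, start=1):
    print(f"[{i}] {p.source_id}")
```

Listing the sources shows which data was available to the model. It does not show how heavily the model relied on each passage.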

Real-World Applications of RAG

Retrieval-Augmented Generation (RAG) isn’t just a fancy term; it’s a powerful AI technique poised to revolutionize how we interact with machines. By bridging the gap between information retrieval and text generation, RAG opens doors to exciting real-world applications across various sectors. Here’s a glimpse into how RAG can transform various aspects of our lives:

- Supercharged Search Engines: Imagine a search engine that not only matches keywords but also understands the context behind your queries. With RAG, search results can be significantly enhanced. By retrieving information from authoritative sources and using it to generate or rank answers, RAG can help users find the most accurate and pertinent results for their specific needs.

- Enhanced Chatbots and Virtual Assistants: Frustrated with generic chatbot responses? RAG can help! By allowing chatbots to access and leverage external knowledge bases during interactions, RAG empowers them to deliver more informative and helpful responses. Imagine a customer service chatbot that can access product manuals or warranty information in real-time, leading to a smoother and more efficient customer experience.

- Content Creation on Steroids: Content creation across various industries can benefit greatly from RAG. RAG can be used to generate high-quality content like informative blog posts, product descriptions, or even personalized marketing materials. By incorporating retrieved data and industry-specific knowledge, RAG can ensure the content is not only factually accurate but also tailored to the target audience.

- Advanced Question Answering Systems: RAG has the potential to revolutionize question-answering systems. Instead of relying solely on pre-programmed responses, RAG allows these systems to access and process information from external sources. This leads to more accurate and comprehensive answers to complex factual queries. Think of a virtual assistant that can not only answer basic questions but also delve deeper by retrieving relevant research papers or expert opinions.

- Redefining Education and Training: The ability of RAG to access and process information from various sources can be a game-changer in education and training. Imagine personalized learning experiences where students can interact with AI tutors that can tailor their responses based on the student’s specific needs and areas of focus. Additionally, RAG can be used to create interactive learning materials that provide factual information alongside engaging content.

Beyond these examples, the potential applications of RAG are vast and constantly evolving. As research continues, we can expect to see RAG integrated into various domains, from healthcare and finance to legal research and scientific exploration. The ability of RAG to provide contextually aware and factually accurate outputs makes it a powerful tool for unlocking the true potential of AI in the real world.

The Future of Retrieval-Augmented Generation

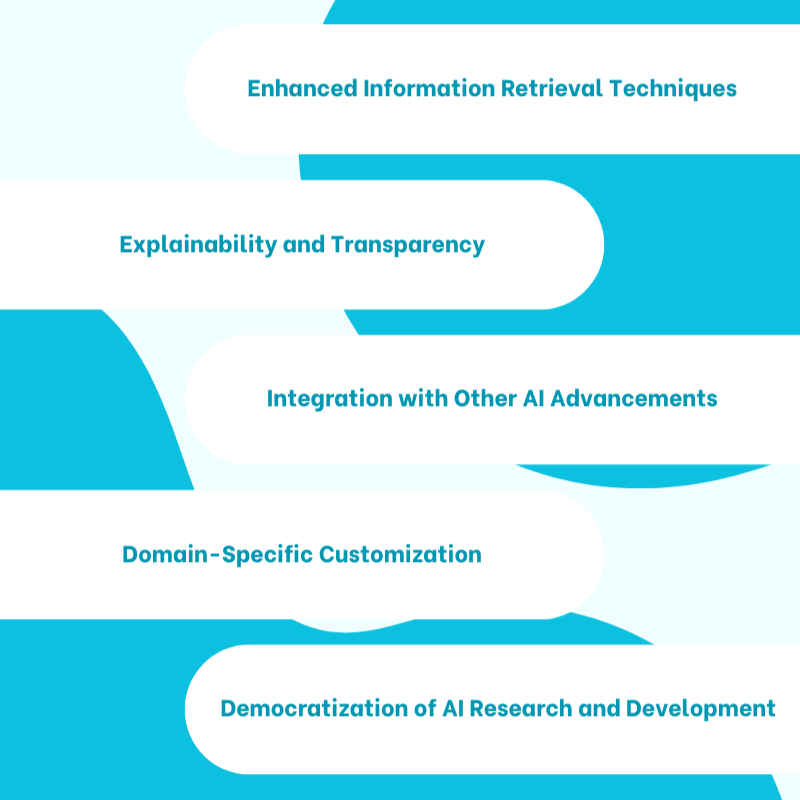

Retrieval-Augmented Generation (RAG) has emerged as a game-changer in the realm of AI, transforming how machines process information and generate text. But what does the horizon hold for RAG? Let’s delve into some of the exciting possibilities:

1. Enhanced Information Retrieval Techniques: One crucial area of focus is improving the information retrieval component of RAG. Current research explores advanced techniques like semantic search and knowledge graph reasoning to ensure retrieved data is not just relevant but also highly accurate and reliable. This will further enhance the factual grounding of RAG’s outputs.

2. Explainability and Transparency: While RAG offers some level of explainability by revealing the retrieved information used, further advancements are needed. Future research will likely focus on developing methods that allow users to understand the weighting RAG assigns to different pieces of retrieved data during the generation process. This will foster trust and enable users to better evaluate the reasoning behind RAG’s outputs.

3. Integration with Other AI Advancements: The future of RAG lies not in isolation but in its ability to synergize with other cutting-edge AI developments. Imagine RAG integrated with advancements in natural language understanding (NLU) – this would allow for a more nuanced comprehension of user queries, leading to even more contextually relevant responses. Additionally, combining RAG with advancements in reasoning and commonsense knowledge could enable machines to generate not just factually accurate responses but also ones that are logically sound and grounded in real-world understanding.

4. Domain-Specific Customization: Many current RAG systems rely on general-purpose information retrieval techniques. The future holds promise for domain-specific RAG systems, whose retrieval and generation components are tuned on data and knowledge bases from a particular domain (e.g., healthcare, finance, law). This would allow RAG to generate outputs that are not only factually accurate but also tailored to the specific terminology and nuances of that domain, leading to even more sophisticated applications.

5. Democratization of AI Research and Development: RAG’s modular architecture, with its distinct information retrieval and text generation components, has the potential to democratize AI research and development. By allowing researchers to focus on improving specific components, the development cycle could be accelerated. Additionally, the open-source nature of some RAG implementations could foster collaboration and innovation within the broader AI community.
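Two of the directions above, semantic search (item 1) and domain-specific retrieval (item 4), can be illustrated together. In the sketch below, each knowledge-base entry carries a domain tag and an embedding vector, and retrieval ranks entries within one domain by cosine similarity. The three-dimensional vectors are hand-made for illustration. In practice they would come from an embedding model, which is outside the scope of this example.

```python
import math

# Toy knowledge base of (domain, text, embedding) entries.
# Assumption: the vectors are invented so the example is self-contained.
KNOWLEDGE_BASE = [
    ("healthcare", "Ibuprofen is a nonsteroidal anti-inflammatory drug.", [0.9, 0.1, 0.0]),
    ("healthcare", "Hypertension is persistently elevated blood pressure.", [0.2, 0.9, 0.1]),
    ("finance", "A bond's yield moves inversely to its price.", [0.1, 0.2, 0.9]),
]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def semantic_search(query_vec, domain, top_k=1):
    """Restrict the search to one domain, then rank by embedding similarity."""
    candidates = [entry for entry in KNOWLEDGE_BASE if entry[0] == domain]
    ranked = sorted(candidates, key=lambda e: cosine(query_vec, e[2]), reverse=True)
    return [text for _, text, _ in ranked[:top_k]]


# Pretend embedding of the question "What is high blood pressure?"
query_vec = [0.1, 0.8, 0.3]
results = semantic_search(query_vec, "healthcare")
print(results)
```

Because similarity is computed on vectors rather than on shared words, the query can match the hypertension entry even though it never uses that term. The domain filter keeps unrelated finance material out of the candidate set.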

In conclusion, the future of Retrieval-Augmented Generation is brimming with exciting possibilities. As research progresses, we can expect RAG to become even more sophisticated, enabling AI applications to deliver unprecedented levels of accuracy, context-awareness, and human-like understanding. This paves the way for a future where AI seamlessly integrates into our lives, empowering us in various ways, from enhancing our learning experiences to revolutionizing the way we interact with technology.

Generative AI With Codersperhour

Codersperhour’s Generative AI service offers a secure and reliable platform for businesses to explore the potential of RAG. Customer data remains confidential: it is not shared with external providers, and custom models are isolated for exclusive use.

With Codersperhour’s secure and scalable AI services, businesses can experiment with RAG and explore its potential to enhance various aspects of their operations. Whether it’s generating informative content, creating more efficient chatbots, or developing robust question-answering systems, RAG offers strong contextual understanding for real-world applications.